Imagine trying to steer a boat through a raging storm, not by turning a wheel, but by subtly altering the very currents around it. This is the kind of challenge that fascinates fluid dynamicists, and it’s a challenge that researchers at the University of Campinas, in collaboration with the Illinois Institute of Technology, are now tackling with the power of artificial intelligence. Their recent work, published in Physical Review Fluids, unveils a methodology that uses neural networks to not only understand but also control complex, chaotic fluid systems, a feat that could improve everything from aircraft design to industrial processes.

For centuries, understanding fluid dynamics – the science of how liquids and gases move – has been one of the most formidable problems in physics. From the gentle ripple of a pond to the destructive power of a hurricane, fluid behavior is often incredibly complex and unpredictable, characterized by phenomena like turbulence, where eddies and swirls create a chaotic dance. This inherent nonlinearity makes these systems notoriously difficult to model and even harder to control. Traditional approaches often rely on simplified equations or computationally intensive simulations, which can fall short when faced with the real-world intricacies of fluid flow.

Enter neural networks, the computational workhorses behind many of today’s AI breakthroughs. These networks, loosely inspired by the structure of the human brain, are exceptionally good at finding hidden patterns and relationships within vast datasets. Until recently, their application in the precise world of fluid control had been somewhat limited. However, the team of Tarcísio C. Déda and William R. Wolf from the University of Campinas, alongside Scott T. M. Dawson from the Illinois Institute of Technology, has developed a framework that applies neural networks to both sides of the problem: modeling the flow and controlling it.

Their methodology centers on a two-pronged approach. First, they train neural networks to act as “surrogate models” of the fluid system itself. Think of these as fast, learned digital twins of the real-world flow. Unlike traditional simulations that crunch numbers based on physical laws, these neural network surrogate models (NNSMs) learn the dynamics by observing the system’s behavior. They are fed data on how the fluid moves under various conditions, and through “back-propagation” (the standard training algorithm, which works out how much each connection in the network contributed to a prediction error so that it can be adjusted), they learn to predict future states of the system and even estimate its equilibrium points: the steady states where the flow, once there, stays put.
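To make the surrogate idea concrete, here is a minimal sketch, not the authors’ implementation. It assumes a made-up one-dimensional system (`true_step`) in place of a flow solver and a tiny one-hidden-layer network written in plain NumPy; the layer size, learning rate, and iteration count are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_step(x, dt=0.1):
    # Toy dynamics standing in for a flow solver (an assumption for illustration).
    return x + dt * (x - x**3)

# Training data: pairs of (current state, next state).
x = rng.uniform(-1.5, 1.5, size=(256, 1))
y = true_step(x)

# One hidden layer with tanh activation.
n_hidden = 16
W1 = rng.normal(0.0, 0.5, size=(1, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(0.0, 0.5, size=(n_hidden, 1))
b2 = np.zeros(1)

def predict(states):
    # Surrogate model: maps a state to the predicted next state.
    return np.tanh(states @ W1 + b1) @ W2 + b2

lr = 0.02
losses = []
for _ in range(3000):
    # Forward pass.
    h = np.tanh(x @ W1 + b1)
    err = (h @ W2 + b2) - y
    losses.append(float(np.mean(err**2)))
    # Backward pass (back-propagation): chain rule from the loss to each weight.
    g_pred = 2.0 * err / len(x)
    g_W2 = h.T @ g_pred
    g_b2 = g_pred.sum(axis=0)
    g_z = (g_pred @ W2.T) * (1.0 - h**2)
    g_W1 = x.T @ g_z
    g_b1 = g_z.sum(axis=0)
    # Gradient-descent update.
    W1 -= lr * g_W1
    b1 -= lr * g_b1
    W2 -= lr * g_W2
    b2 -= lr * g_b2
```

After training, `predict` plays the role of the surrogate: it is cheap to evaluate and, because it is built from differentiable operations, gradients can be passed through it.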

The true innovation, however, lies in the second part of their approach: leveraging these NNSMs to train a second neural network, one specifically designed to act as a closed-loop controller. Imagine a smart thermostat, but instead of regulating temperature, it’s constantly adjusting tiny “actuators” – like miniature fans or pumps – to subtly influence the fluid and guide it towards a desired, stable state. This neural network controller learns to do exactly that, not through explicit programming, but by interacting with the NNSM in a recurrent, iterative training process.
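The idea of training a controller by running it in a loop with the surrogate can be sketched in a few lines. This is a deliberately stripped-down illustration under stated assumptions: a one-line linear model (`a * x + b * u`) stands in for the NNSM, a single feedback gain stands in for the controller network, and the gradient is carried along the rollout by hand rather than by a deep-learning library. All names and numbers are hypothetical.

```python
def closed_loop_loss(gain, a=1.1, b=1.0, x0=1.0, horizon=10):
    """Chain surrogate and controller over a finite horizon.

    Returns the summed squared state and its derivative with respect to gain.
    """
    x, dx = x0, 0.0            # state and its sensitivity d(x)/d(gain)
    loss, dloss = 0.0, 0.0
    for _ in range(horizon):
        u = -gain * x          # controller (stand-in for the second network)
        du = -x - gain * dx    # chain rule through the controller
        # Surrogate step (stand-in for the NNSM) and its sensitivity.
        x, dx = a * x + b * u, a * dx + b * du
        loss += x * x
        dloss += 2.0 * x * dx
    return loss, dloss

gain = 0.0                     # start with no control: the toy system is unstable
initial_loss, _ = closed_loop_loss(gain)
for _ in range(2000):
    _, grad = closed_loop_loss(gain)
    gain -= 1e-3 * grad        # plain gradient descent on the controller parameter
final_loss, _ = closed_loop_loss(gain)

# In this toy model the closed loop is stable when |a - b * gain| < 1.
closed_loop_pole = 1.1 - 1.0 * gain
```

The design point this illustrates is that the controller is never told what to do explicitly. It is only penalized for how far the predicted state strays from the target over the horizon, and the gradient of that penalty flows back through every step of the loop.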

This iterative training is key to overcoming a significant hurdle in controlling chaotic systems: the lack of data around equilibrium points. A chaotic system left to itself spends its time far from equilibrium, so there’s plenty of data from that regime to learn from. But it rarely lingers near the steady state the controller is aiming for, so data is scarcest exactly where accurate predictions matter most for fine-tuning the control. The researchers addressed this by cycling through phases of combined random “open-loop” actuation (where the system is intentionally perturbed to generate more data) and “closed-loop” control (where the AI actively tries to stabilize it). Each cycle brings the system closer to equilibrium and yields new data there, so the models improve their accuracy in the most critical region for achieving stabilization.
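The cycling idea can be illustrated with a toy loop. The sketch below is an assumption-laden stand-in, not the published procedure: the “flow” is a scalar system with an unstable steady state at zero, the surrogate is a least-squares linear fit instead of a neural network, and the controller is a simple gain chosen to cancel the model’s predicted growth. What it keeps from the description above is the structure: probe with random actuation while the current controller runs, refit the model on the new data, update the controller, repeat.

```python
import numpy as np

rng = np.random.default_rng(1)
DT = 0.1

def plant_step(x, u):
    # Toy nonlinear "flow": x = 0 is unstable, x = +1 and x = -1 are stable.
    return x + DT * (x - x**3 + u)

def fit_linear_surrogate(xs, us, xs_next):
    # Least-squares fit of x_next ~ a * x + b * u (stand-in for network training).
    A = np.column_stack([xs, us])
    (a_hat, b_hat), *_ = np.linalg.lstsq(A, xs_next, rcond=None)
    return a_hat, b_hat

gain, x = 0.0, 0.05
errors = []                    # model error near the equilibrium, per cycle
for cycle in range(4):
    xs, us, xs_next = [], [], []
    for _ in range(200):
        # Closed-loop control plus random open-loop probing.
        u = -gain * x + 0.5 * rng.normal()
        x_new = plant_step(x, u)
        xs.append(x)
        us.append(u)
        xs_next.append(x_new)
        x = x_new
    a_hat, b_hat = fit_linear_surrogate(np.array(xs), np.array(us), np.array(xs_next))
    gain = a_hat / b_hat       # cancel the growth the model predicts
    # The true linearization at x = 0 has a = 1 + DT.
    errors.append(abs(a_hat - (1.0 + DT)))
```

In the first cycle the uncontrolled system drifts away from zero, so the model is fitted mostly on data far from the equilibrium. Once a rough controller pulls the state back, later cycles collect data near zero and the fitted model there becomes more accurate, which is the effect the cycling is meant to produce.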

To demonstrate the robustness of their methodology, the team put it to the test on four distinct systems, each presenting unique challenges. The first, the Lorenz system, is a classic simplified model of atmospheric convection, famous for its “butterfly effect”: tiny changes in the starting conditions grow into large, unpredictable differences. The AI successfully navigated its chaotic dynamics, finding and stabilizing its equilibrium points.
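For readers who want to see the butterfly effect for themselves, the Lorenz system is only three equations. The sketch below uses the classic textbook parameter values, which are an assumption here since the article does not say which values the study used, and a standard fourth-order Runge-Kutta integrator. It checks one of the system’s equilibrium points and then follows two trajectories that start almost identically.

```python
import numpy as np

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0   # classic values (assumed, not from the study)

def lorenz_rhs(state):
    # Right-hand side of the Lorenz equations.
    x, y, z = state
    return np.array([SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z])

def rk4_step(state, dt):
    # One step of the classical fourth-order Runge-Kutta method.
    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(state + 0.5 * dt * k1)
    k3 = lorenz_rhs(state + 0.5 * dt * k2)
    k4 = lorenz_rhs(state + dt * k3)
    return state + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)

# A nonzero equilibrium: every component of the right-hand side vanishes here.
r = np.sqrt(BETA * (RHO - 1.0))
equilibrium = np.array([r, r, RHO - 1.0])

# Two trajectories whose starting points differ by one part in a hundred million.
a = np.array([1.0, 1.0, 1.0])
b = a + np.array([1e-8, 0.0, 0.0])
for _ in range(2500):          # integrate to t = 25 with dt = 0.01
    a, b = rk4_step(a, 0.01), rk4_step(b, 0.01)
separation = np.linalg.norm(a - b)
```

With these parameter values the equilibrium is unstable, so a trajectory started nearby spirals away from it. Holding the system there requires continual feedback, which is the job the controller is trained to do.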

Next, they tackled a modified version of the Kuramoto-Sivashinsky equation, a model often used to describe phenomena like flame propagation and thin film flows, known for its complex spatiotemporal chaos. Here, the neural network controller demonstrated its ability to not just stabilize the system but also to find and maintain a desired, less chaotic state.

The methodology was then applied to more practical scenarios. A “streamwise-periodic two-dimensional channel flow” mimicked the flow of fluid through a pipe or channel, a fundamental problem in engineering. The AI learned to suppress instabilities and maintain a steady flow, showcasing its potential for optimizing industrial processes.

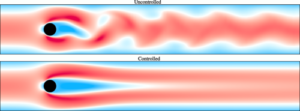

Finally, the researchers challenged their AI with a “confined cylinder flow,” a scenario relevant to many real-world applications, such as the flow around aircraft wings or within internal combustion engines. This system presents significant challenges due to the presence of vortices and shedding phenomena. Yet, the neural network controller proved capable of actively managing these complex flow patterns, demonstrating its ability to handle intricate geometries and dynamic interactions.

One of the significant advantages of this neural network-based approach is its computational efficiency. Once trained, these AI models can predict and control fluid behavior far more rapidly than traditional simulation methods, which often require immense computational power. This speed is crucial for real-time applications where rapid decision-making is paramount. Imagine an aircraft wing that can instantly adjust its shape in response to turbulent air, or a pipeline that can dynamically optimize its flow for maximum efficiency.

Furthermore, the methodology incorporates a technique called L1 regularization, which adds a penalty on the absolute size of the networks’ weights during training and so pushes unneeded connections toward zero, making the networks more “sparse.” This not only improves their efficiency but also makes them more interpretable, allowing researchers to gain insights into how the AI is making its control decisions. This is a crucial step towards building trust and understanding in AI-driven systems.
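The sparsifying effect of an L1 penalty is easy to see on a small example. The sketch below is an illustration under assumptions rather than the paper’s setup: it uses a linear model with ten hypothetical candidate inputs, of which only two actually matter, and minimizes the penalized loss with iterative soft-thresholding (a proximal-gradient method chosen here because it produces exact zeros). The penalty strength `lam` is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_inputs = 200, 10

# Synthetic data: only inputs 2 and 7 influence the output.
X = rng.normal(size=(n_samples, n_inputs))
true_w = np.zeros(n_inputs)
true_w[[2, 7]] = [1.5, -2.0]
y = X @ true_w + 0.01 * rng.normal(size=n_samples)

def soft_threshold(w, t):
    # Shrink every weight toward zero by t; weights smaller than t become exactly zero.
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)

lam = 0.1                                          # strength of the L1 penalty
step = n_samples / np.linalg.norm(X, 2) ** 2       # 1 / Lipschitz constant of the gradient

# Minimize (1 / 2n) * ||X w - y||^2 + lam * ||w||_1.
w = np.zeros(n_inputs)
for _ in range(500):
    grad = X.T @ (X @ w - y) / n_samples           # gradient of the data-fit term
    w = soft_threshold(w - step * grad, step * lam)

active_inputs = np.flatnonzero(w).tolist()
```

The weights that survive point to the inputs the model actually relies on. The same kind of penalty can be added to a neural network’s training loss, where it plays the role described above: connections that do not earn their keep are driven to zero.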

The implications of this research are far-reaching. In aerospace engineering, this could lead to more stable and efficient aircraft, capable of autonomously adapting to changing atmospheric conditions and minimizing drag. In industrial settings, it could revolutionize process control, optimizing flows in chemical reactors, pipelines, and manufacturing systems, leading to reduced energy consumption and improved product quality. For environmental applications, it could aid in the design of more efficient water treatment plants or strategies for mitigating pollutant dispersion.

Beyond these immediate applications, this work opens up new avenues for fundamental research in fluid dynamics. By observing how the neural networks learn to control these complex systems, scientists can gain deeper insights into the underlying physical principles governing fluid behavior. It’s a powerful feedback loop: AI helps us understand fluids, and that understanding, in turn, helps us build even smarter AI.

The team from the University of Campinas and Illinois Institute of Technology has not only pushed the boundaries of what’s possible with artificial intelligence in fluid control but has also laid the groundwork for a future where intelligent systems can actively shape and optimize the flow of matter around us. From taming turbulent winds to refining industrial processes, the ability to control the unseen dance of fluids promises a new era of efficiency, stability, and innovation. The breeze, it seems, is finally learning to be baffled.